Quickstart: Deploy on LangGraph Cloud

Create a repository on GitHub

To deploy a LangGraph application to LangGraph Cloud, your application code must reside in a GitHub repository. Both public and private repositories are supported.

You can deploy any LangGraph Application to LangGraph Cloud.

For this guide, we'll use the pre-built Python ReAct Agent template.

Get Required API Keys for the ReAct Agent template

The ReAct Agent application requires API keys from Anthropic and Tavily. You can get these keys by signing up on their respective websites.

Alternative: If you'd prefer a scaffold application that doesn't require API keys, use the New LangGraph Project template instead of the ReAct Agent template.

- Go to the ReAct Agent repository.

- Fork the repository to your GitHub account by clicking the Fork button in the top right corner.

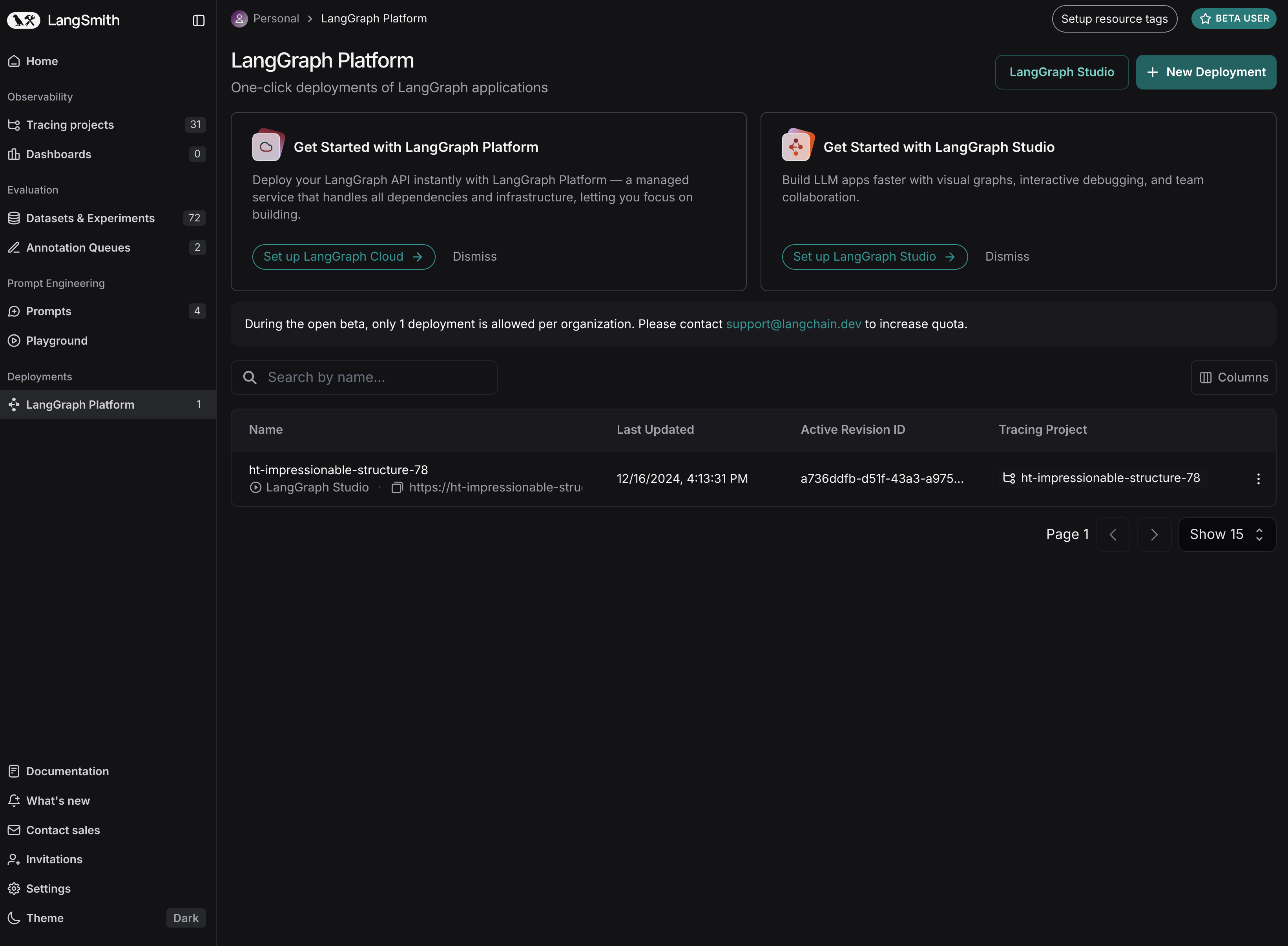

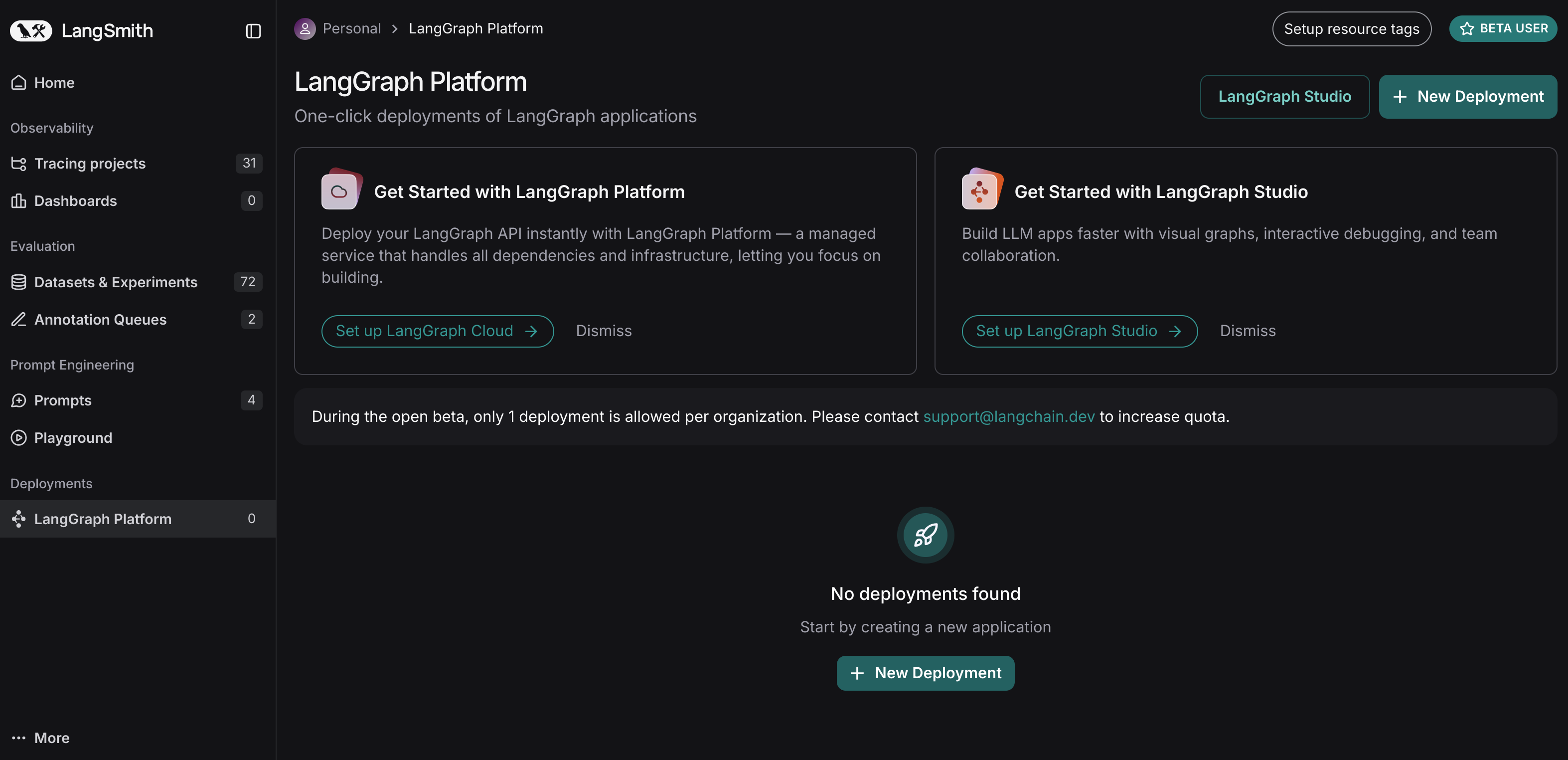

Deploy to LangGraph Cloud

1. Log in to LangSmith

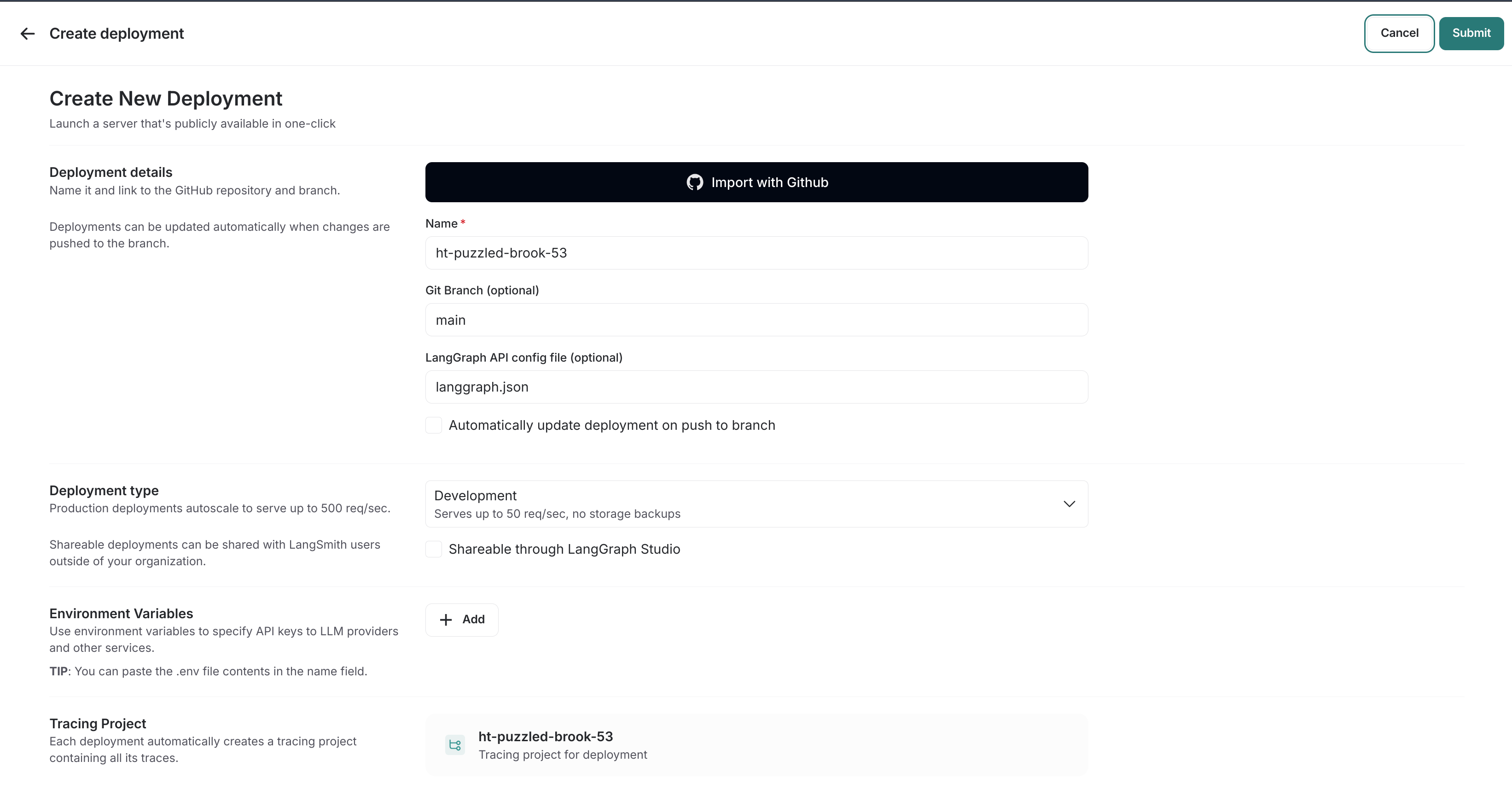

3. Click on + New Deployment (top right corner)

4. Click on Import from GitHub (first-time users)

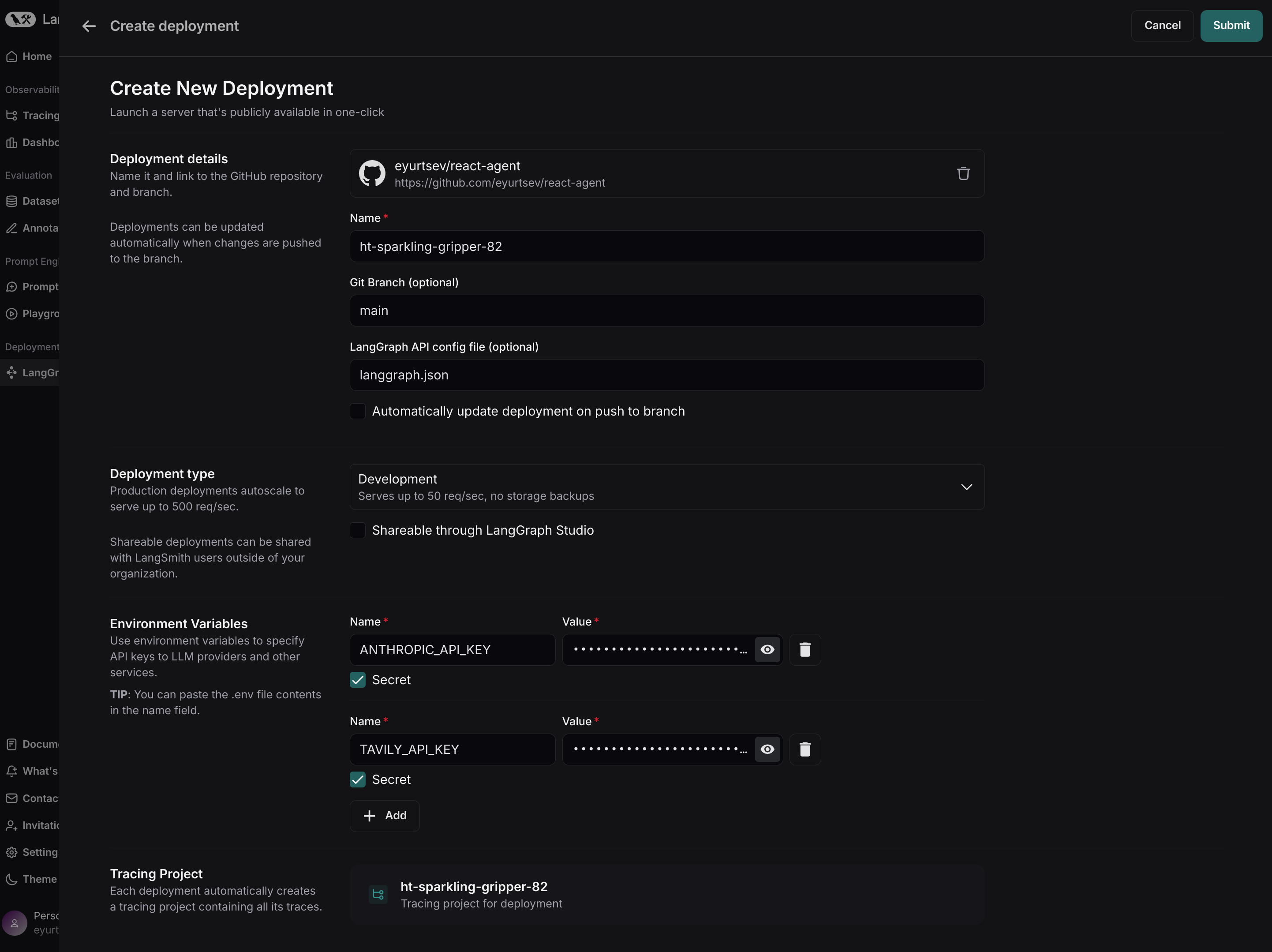

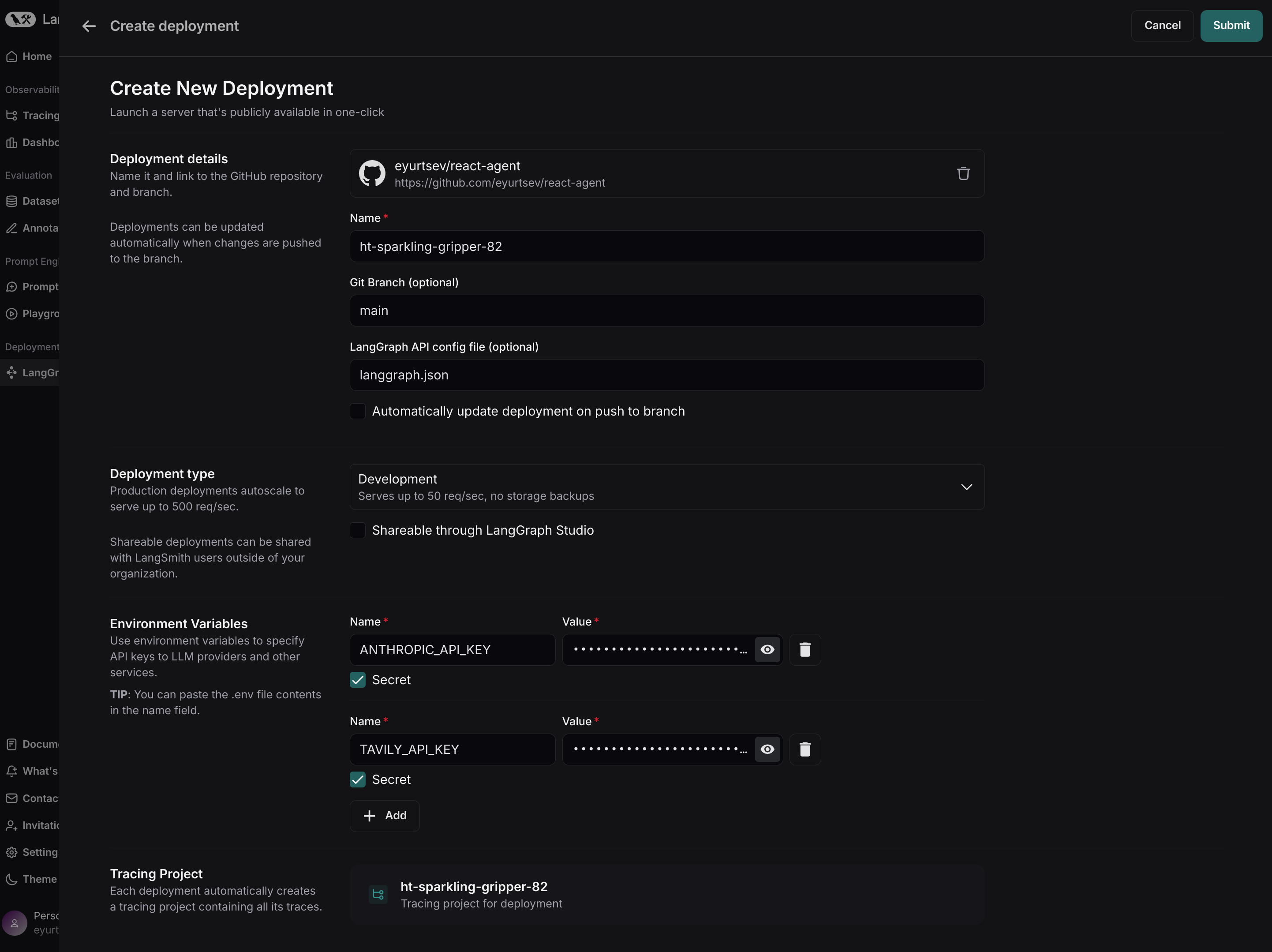

5. Select the repository and configure the environment variables and secrets:

    - Repository: Select the repository you forked earlier (or any other repository you want to deploy).

    - Set the secrets and environment variables required by your application. For the ReAct Agent template, you need to set the following secrets:

        - ANTHROPIC_API_KEY: Get an API key from Anthropic.

        - TAVILY_API_KEY: Get an API key on the Tavily website.

6. Click Submit to deploy.
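Before submitting, it can help to confirm that you have both secrets from step 5 at hand. The following is a minimal sketch and not part of LangGraph Cloud: `missing_secrets` is a hypothetical helper that reports which required names are unset or blank in a mapping such as `os.environ`.

```python
from __future__ import annotations

import os
from typing import Mapping

# Secret names required by the ReAct Agent template (listed in step 5).
REQUIRED_SECRETS = ("ANTHROPIC_API_KEY", "TAVILY_API_KEY")


def missing_secrets(env: Mapping[str, str], required=REQUIRED_SECRETS) -> list[str]:
    """Return the required names that are unset or blank in `env` (hypothetical helper)."""
    return [name for name in required if not env.get(name, "").strip()]


if __name__ == "__main__":
    missing = missing_secrets(os.environ)
    if missing:
        print("Missing secrets:", ", ".join(missing))
    else:
        print("All required secrets are set.")
```

This only checks your local environment; the values still have to be entered as secrets in the deployment form.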

LangGraph Studio Web UI

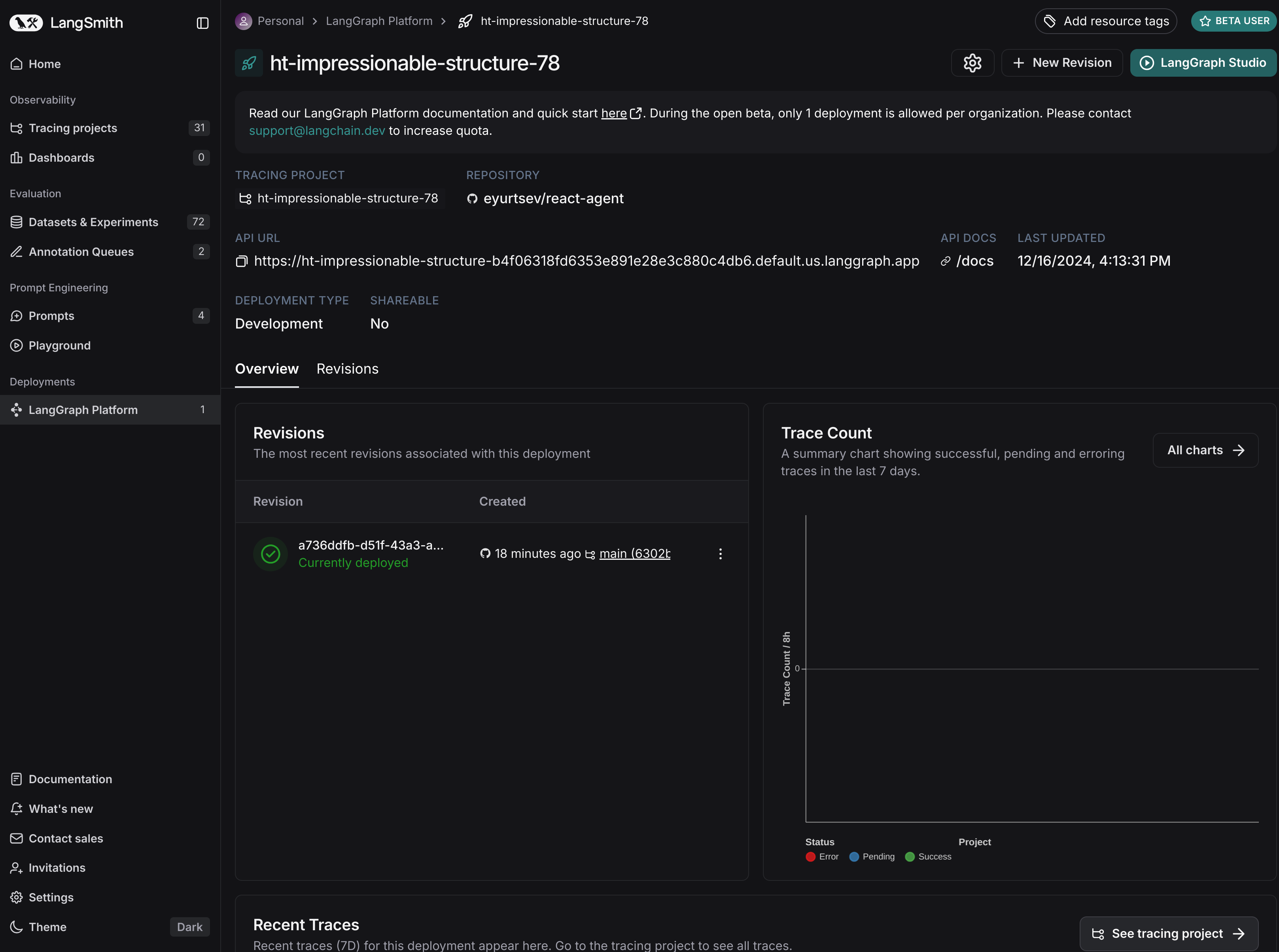

Once your application is deployed, you can test it in LangGraph Studio.

Test the API

Note

The API calls below are for the ReAct Agent template. If you're deploying a different application, you may need to adjust the API calls accordingly.

Before calling the API, get the URL of your LangGraph deployment. You can find it in the Deployment view. Click the URL to copy it to the clipboard.

You also need a LangSmith API key so that you can authenticate with LangGraph Cloud.
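One way to avoid hardcoding the deployment URL and API key is to read them from environment variables. This is a sketch under assumptions: the variable names `LANGGRAPH_DEPLOYMENT_URL` and `LANGSMITH_API_KEY` and the `load_settings` helper are choices made for this example, not names this guide prescribes.

```python
import os
from typing import Mapping, NamedTuple


class DeploymentSettings(NamedTuple):
    url: str
    api_key: str


def load_settings(env: Mapping[str, str] = os.environ) -> DeploymentSettings:
    """Read connection settings from `env`; the variable names are assumptions of this sketch."""
    # Drop surrounding whitespace and any trailing slash from the copied URL.
    url = env.get("LANGGRAPH_DEPLOYMENT_URL", "").strip().rstrip("/")
    api_key = env.get("LANGSMITH_API_KEY", "").strip()
    if not url:
        raise ValueError("LANGGRAPH_DEPLOYMENT_URL is not set")
    if not api_key:
        raise ValueError("LANGSMITH_API_KEY is not set")
    return DeploymentSettings(url=url, api_key=api_key)
```

The result can then be passed to the client, for example `get_client(url=settings.url, api_key=settings.api_key)`.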

Install the LangGraph Python SDK (`pip install langgraph-sdk`).

Send a message to the assistant (threadless run) with the async client:

    import asyncio

    from langgraph_sdk import get_client


    async def main():
        client = get_client(url="your-deployment-url", api_key="your-langsmith-api-key")

        # Stream the run's events as they arrive.
        async for chunk in client.runs.stream(
            None,  # Threadless run
            "agent",  # Name of assistant. Defined in langgraph.json.
            input={
                "messages": [{
                    "role": "human",
                    "content": "What is LangGraph?",
                }],
            },
            stream_mode="updates",
        ):
            print(f"Receiving new event of type: {chunk.event}...")
            print(chunk.data)
            print("\n\n")


    # `async for` must run inside a coroutine, so drive it with asyncio.run.
    asyncio.run(main())

Install the LangGraph Python SDK (`pip install langgraph-sdk`).

Send a message to the assistant (threadless run) with the sync client:

    from langgraph_sdk import get_sync_client

    client = get_sync_client(url="your-deployment-url", api_key="your-langsmith-api-key")

    for chunk in client.runs.stream(
        None,  # Threadless run
        "agent",  # Name of assistant. Defined in langgraph.json.
        input={
            "messages": [{
                "role": "human",
                "content": "What is LangGraph?",
            }],
        },
        stream_mode="updates",
    ):
        print(f"Receiving new event of type: {chunk.event}...")
        print(chunk.data)
        print("\n\n")
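With `stream_mode="updates"`, each chunk carries an event name and a data payload, as the loop above prints. The sketch below shows one way to pull the text of the newest message out of such a payload. It assumes, without confirmation from this guide, that the payload is a dict mapping a node name to a state update containing a `messages` list of dicts with a string `content` field. `latest_message_text` is a hypothetical helper, and it returns `None` for any payload that does not match that shape.

```python
from typing import Any, Optional


def latest_message_text(data: Any) -> Optional[str]:
    """Return the content of the last message in an "updates" payload, or None.

    Assumed shape (not guaranteed by the API):
    {node_name: {"messages": [{"content": "..."}, ...]}}
    """
    if not isinstance(data, dict):
        return None
    for update in data.values():
        if not isinstance(update, dict):
            continue
        messages = update.get("messages")
        if isinstance(messages, list) and messages:
            last = messages[-1]
            content = last.get("content") if isinstance(last, dict) else None
            # Only plain-string content is handled in this sketch.
            if isinstance(content, str):
                return content
    return None
```

Inside the streaming loop, you could call `latest_message_text(chunk.data)` and print the result when it is not `None`.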

Install the LangGraph JS SDK (`npm install @langchain/langgraph-sdk`).

Send a message to the assistant (threadless run):

    const { Client } = await import("@langchain/langgraph-sdk");

    const client = new Client({ apiUrl: "your-deployment-url", apiKey: "your-langsmith-api-key" });

    const streamResponse = client.runs.stream(
      null, // Threadless run
      "agent", // Assistant ID
      {
        input: {
          "messages": [
            { "role": "user", "content": "What is LangGraph?" }
          ]
        },
        streamMode: "messages",
      }
    );

    for await (const chunk of streamResponse) {
      console.log(`Receiving new event of type: ${chunk.event}...`);
      console.log(JSON.stringify(chunk.data));
      console.log("\n\n");
    }

Next Steps

Congratulations! If you've worked through this tutorial, you're well on your way to becoming a LangGraph Cloud expert. Here are some resources to explore next:

LangGraph Framework

- LangGraph Tutorial: Get started with the LangGraph framework.

- LangGraph Concepts: Learn the foundational concepts of LangGraph.

- LangGraph How-to Guides: Guides for common tasks with LangGraph.

📚 Learn More about LangGraph Platform

Expand your knowledge with these resources:

- LangGraph Platform Concepts: Understand the foundational concepts of the LangGraph Platform.

- LangGraph Platform How-to Guides: Discover step-by-step guides to build and deploy applications.

- Launch Local LangGraph Server: This quick start guide shows how to start a LangGraph Server locally for the ReAct Agent template. The steps are similar for other templates.